Programming note from Oren: As noted on Friday, I’ve been on vacation the past few days, so today we have a first-ever Understanding America guest post, from American Compass executive director Abigail Ball. A couple of weeks ago, I made the case that AI appears set to be the next general purpose technology but not to achieve “superintelligence” or upend human society. Abby disagrees, and has written here the case that the latest wave of breakthroughs supports a high level of confidence that This Time Is Different. We report, you decide.

By Abigail Ball

There is admittedly a lot of hype around AI right now, which makes it easy to be skeptical. Everything is “AI-powered” or “built with AI.” My new iPhone was allegedly purpose-built for Apple Intelligence (A.I. Get it?). Just as we no longer hear much about “the Uber for [fill in the blank],” this AI-related marketing boom will taper off eventually. But what will we be left with?

Recent breakthroughs in AI reasoning capabilities suggest we’re entering a new phase in computing—one where machines don’t just recognize patterns but can actually think through problems step by step, much as humans do. This has the potential to reshape everything from education to science to health care to our legal system. What that world will look like is impossible to predict in detail, but we should be thinking now about how to prepare for a future meaningfully shaped by the deep learning revolution and its progeny.

First, some key terms. Artificial intelligence encompasses everything from spam filters to image generators, and most modern systems are built on the same breakthrough of “deep learning”: basically, teaching computers to recognize patterns by processing data through multiple layers of a neural network, loosely analogous to how the human brain learns to recognize speech or faces.

At the frontier of this technology are large language models that can engage in complex reasoning. Terms like “artificial general intelligence” (AGI) and “superintelligence” are often used in these discussions, but they’re contested concepts that can obscure more than they illuminate. More useful is the concept of “powerful AI”—systems that can match or exceed human performance across a range of intellectual tasks while working virtually. As defined by Anthropic CEO Dario Amodei in his excellent essay, “Machines of Loving Grace,” powerful AI would:

Be smarter than a Nobel Prize winner across many fields, with the ability to “prove unsolved mathematical theorems, write extremely good novels, write difficult codebases from scratch, etc.”

Be able to do all the things a human can do working virtually (take actions online, make phone calls, etc.)

Act as a long-term agent, working on projects over hours or even weeks

Not necessarily be physically embodied but have the ability to use real-world tools and robotics via computer

Be fast, with the ability to “run millions of instances of” an action, though ultimately “limited by the response time of the physical world or of software it interacts with”

Work independently or cooperatively across its many instances, “perhaps with different subpopulations fine-tuned to be especially good at particular tasks”

Amodei summarizes this vision as a “country of geniuses in a datacenter.”

Where Are We Now?

The most significant recent breakthrough in AI has been the emergence of models that can tackle problems step by step. Rather than simply pattern-matching or predicting what comes next, these systems engage in structured reasoning—considering options, evaluating trade-offs, and arriving at novel conclusions.

The capability first emerged in OpenAI’s o-series models and has since been demonstrated by others like Google DeepMind’s Gemini 2.0 Flash Thinking Experimental and DeepSeek-R1. What makes these models different is their ability to carry out internal “discussions” before providing an answer. When asked a complex question (say, about health care policy reform), they don’t simply regurgitate training data but instead work through various approaches, consider pros and cons, and synthesize novel solutions.

For example, as part of DeepSeek-R1’s chain of thought in response to a prompt about reforming U.S. health care, it wrote:

But wait, would this be feasible? The public option has been proposed before but failed due to opposition. Maybe if it’s introduced in stages, like first making it available on the ACA marketplaces, then expanding. Also, using state-level initiatives where possible. For example, allowing states to implement their own public options with federal support, like some states have done. Then, over time, more states adopt it, creating a de facto national system. That might be more feasible than a federal overhaul.

You can read the full reasoning chain here.

Source: https://openai.com/index/learning-to-reason-with-llms/

A remarkable feature of reasoning models is that, with more time to work through a problem, they perform much better on benchmarks. What’s more, rather than lose clarity in the process of compression (like squeezing a high-resolution photo into a smaller file size), the responses produced by these models are qualitatively better than their training data. While older models effectively took a “picture of a picture” and spit back out some lossy version of their training, these new models go beyond what they originally learned—like a lawyer who, informed by his law school training, writes a brief presenting a novel legal theory. This ability to reason through problems and generate novel solutions is beginning to look like genuine thinking.

In DeepSeek’s paper introducing their R1 model, the researchers describe how this process works:

This improvement is not the result of external adjustments but rather an intrinsic development within the model. DeepSeek-R1-Zero naturally acquires the ability to solve increasingly complex reasoning tasks by leveraging extended test-time computation. … This moment is not only an “aha moment” for the model but also for the researchers observing its behavior. It underscores the power and beauty of reinforcement learning: rather than explicitly teaching the model on how to solve a problem, we simply provide it with the right incentives, and it autonomously develops advanced problem-solving strategies.

With this reasoning ability, models like o1 and R1 can solve much harder problems—particularly in areas like math and code—than any previous language model. What’s more, their solutions to these problems can themselves be used to train future models to solve yet harder problems. Previously, developers would have needed to pay human experts to write down detailed solutions to problems to get this kind of data. Now, it can be automated.
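To make that loop concrete, here is a minimal Python sketch. It is a toy illustration, not OpenAI’s or DeepSeek’s actual pipeline. It assumes a task whose answers can be checked automatically (simple addition), and the names `noisy_solver`, `verify`, and `collect_verified_examples` are hypothetical stand-ins: the "model" is just a function that is wrong about 30% of the time, and only answers that pass the checker are kept as training examples.

```python
import random


def noisy_solver(a, b, rng):
    """Hypothetical stand-in for a model: returns a + b, but errs about 30% of the time."""
    answer = a + b
    if rng.random() < 0.3:
        answer += rng.choice([-1, 1])
    return answer


def verify(a, b, answer):
    """Automatic checker: arithmetic can be verified without a human expert."""
    return answer == a + b


def collect_verified_examples(n_problems, attempts_per_problem, seed=0):
    """Sample several attempts per problem and keep the first one that verifies."""
    rng = random.Random(seed)
    dataset = []
    for _ in range(n_problems):
        a, b = rng.randint(0, 99), rng.randint(0, 99)
        for _ in range(attempts_per_problem):
            answer = noisy_solver(a, b, rng)
            if verify(a, b, answer):
                dataset.append({"a": a, "b": b, "solution": answer})
                break  # one verified solution per problem is enough
    return dataset


examples = collect_verified_examples(n_problems=100, attempts_per_problem=4)
print(len(examples), "verified examples collected with no human grader")
```

The point of the sketch is the filter: because wrong answers are discarded by the checker, the collected data is more reliable than the solver that produced it. This is why the approach fits math and code, where correctness can be checked mechanically.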

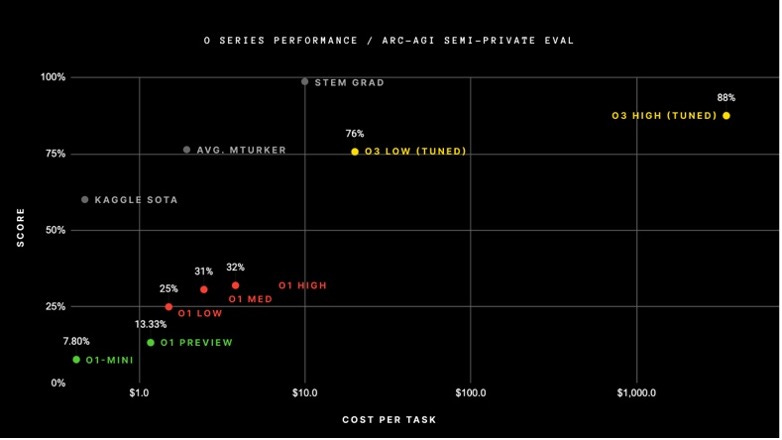

This virtuous cycle is already in motion: OpenAI’s o3 model, set to be released just a few months after o1, offers dramatically better performance yet again.

Source: https://arcprize.org/blog/oai-o3-pub-breakthrough

In 2020, GPT-3 got a score of 0% on the ARC-AGI test, a benchmark that is yet to be defeated by any model. As its creators describe it, the goal of this test is to “measure the efficiency of AI skill-acquisition on unknown tasks.” Rather than just measure skills that a model was trained to perform, ARC-AGI describes intelligence as the ability to “efficiently acquire new skills”—in other words, an intelligent model can “adapt to new problems it has not seen before and that its creators (developers) did not anticipate.”

Four years after GPT-3’s uninspiring performance, GPT-4o eked out a 5%. Then, o1 blew that out of the water with a score of about 30%. Within months, o3 received a passing grade: 76% when using ARC’s compute limit, and 88% when the model used additional computing resources. OpenAI reports that o1 made similar improvements over GPT-4o on a range of benchmarks, including in coding ability.

While benchmark performance alone doesn’t tell the whole story, the dramatic improvement on ARC-AGI and other scores suggests something qualitatively different is happening with these new models.

What’s Next

Thus far, there’s no evidence that AI developers are hitting a wall—if anything, in the words of OpenAI cofounder Ilya Sutskever, it’s becoming clearer every day that the models just want to learn.

When it comes to the models themselves, two areas give me the most reason for optimism: reasoning and agents.

An important consequence of the latest breakthroughs in reasoning models is that their continued improvement does not necessarily depend upon throwing more data at them or making them ever larger. With the ability to think, these models have added a new vector: time. What will they be able to accomplish when given even more time to reason through complex questions? What happens when minutes of reasoning time become hours, days, or even weeks? And then consider that they will simultaneously be getting more efficient as computing gets faster: what now takes two minutes to reason through will at some point take a minute, 30 seconds, a moment.

Intimately tied to this reasoning ability is the question of agentic AI: models that can take action in the real world and work on more complex tasks. Colloquially, this is the idea of creating a personal assistant for every human. But that doesn’t just mean a chatbot that makes your dentist appointments. With AI agents, many areas of the economy could be supercharged. Just a few days ago, OpenAI released Operator, its first model capable of using the internet. In the future, these agents are likely to be extremely smart, fast, tireless, and thoughtful creatures that work alongside you. What would you ask them to do? A scientific researcher could treat one like a graduate student, handing it a hefty question and leaving it to work through potential answers, asking for clarification whenever necessary. A small business owner might use one as a consultant, providing it with all the business’s internal information, describing challenges, and asking for plans and strategies for everything from marketing to logistics.

Where Are the Limits?

Predicting future developments from current capabilities requires extrapolation, so the question becomes whether we should expect progress to continue on its current trajectory, accelerate, or slow. Some (like Oren) argue that we are more likely on an S-curve that will eventually plateau, as has been the case with the gains from previous technological breakthroughs. This is likely true of any particular breakthrough but fails to appreciate the pattern of progress now underway. Like Moore’s Law in the semiconductor industry, AI progress is likely to proceed through a series of breakthroughs, each opening up new possibilities just as previous approaches reach their limits.

Consider how semiconductor manufacturing has evolved: when one approach hits physical limits, researchers find entirely new ways forward—moving from traditional lithography to extreme ultraviolet (EUV), developing new transistor architectures when quantum effects became problematic. Similarly, AI has already jumped from one paradigm (pattern recognition through massive pre-training) to another (reasoning through internal dialogue). Each breakthrough opens up new horizons before we hit the limitations of current approaches. To quote OpenAI CEO Sam Altman, “there is no wall.”

This does require a bit of faith, but it’s worth remembering that Gordon Moore (co-founder of Fairchild Semiconductor and Intel) first made his eponymous prediction-cum-law in 1965 and revised it in 1975. He had no way of knowing that EUV lithography would someday be the key to pushing the semiconductor industry forward, just as we don’t know what breakthroughs will keep the AI industry moving in a year or five years’ time.

But while Altman may be correct about the lack of a technical wall, a world transformed by powerful AI is still a world filled with humans. Not everything can be solved by intelligence alone. In a democratic society, we don’t just do what’s most efficient. Sometimes that’s a good thing, and other times it means the loudest voices keep us from building more housing. When it comes to AI, it means that adoption will be uneven. In sclerotic areas, like defense procurement, AI has plenty of potential to rationalize our approach, but the reality is that the logjam is human, not technical. Until we find the political cojones to fix the problem, nothing will change.

This also applies in the private sector, where adoption depends on thousands of individual decisionmakers: IT directors and chief technology officers and small business owners will all have to figure out what it means to adopt AI in the day-to-day lives of their businesses. This will take time. Diffusion across the economy will be uneven, which means that labor market effects will likely be stretched out over a fairly long period, as companies figure out what AI can and cannot do for them (or perhaps more importantly, what they trust it to do for them—I expect that it will take quite a while for people to fully trust these systems with economically valuable decision-making).

The labor market effects of AI are some of the most difficult to predict, but the key point to remember is that a system with 90% of human capabilities is nothing like one that reaches 100%. An AI system that can do almost all of your job still can’t do your job. This is where productivity growth comes from: when you only need to do 10% of the job you once did, you can do much more with your time, focusing on the areas where human intelligence, judgment, and social capital are most valuable, and you can do entirely new things for which we do not yet even have words. Unless AI can do 100% of the job, the “world without work” vision of some AI thinkers is quite far off, if it is in the cards at all.
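The arithmetic behind the 90%-versus-100% point can be sketched in a few lines of Python. This is an illustration under simplifying assumptions that are mine rather than the author’s: a job is a fixed bundle of tasks, AI handles its share at no cost to the worker’s time, and the worker’s total hours stay the same. The function name `output_multiplier` is hypothetical.

```python
def output_multiplier(automated_fraction):
    """Jobs' worth of output one worker can cover when AI does the given share of each job.

    Returns float('inf') when no human share remains, i.e. the worker is no longer needed.
    """
    if not 0.0 <= automated_fraction <= 1.0:
        raise ValueError("automated_fraction must be between 0 and 1")
    human_share = 1.0 - automated_fraction
    if human_share == 0.0:
        return float("inf")
    return 1.0 / human_share


for fraction in (0.0, 0.5, 0.9, 0.99, 1.0):
    multiplier = output_multiplier(fraction)
    print(f"{fraction:.0%} automated: about {multiplier:.0f}x output per worker")
```

Under these assumptions, a worker whose job is 90% automated is still essential and roughly ten times as productive, and at 99% roughly a hundred times. Only at exactly 100% does the human share vanish, which is the discontinuity the paragraph above describes.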

For most of us, the question isn’t whether to embrace or reject AI, but how to think clearly about its development and prepare for its impacts. While we can’t predict exactly how these capabilities will evolve, we can already see enough to know that something big is on the horizon. The time to start adjusting our expectations is before the next breakthrough, not after.

Great post—I agree, especially on labor market effects. I think we're on track to get a system capable of automating ~99% of remote work within 5 years—but diffusion of this capability throughout the economy might well take a decade+.

Initially, it will be expensive to run, due to lack of compute. Then, there is the fact that many large corps are terminally resistant to adopting new technology that may disrupt their ways of working, and bureaucracies will find all sorts of excuses not to adopt. Fast-moving startups who can stay lean due to a high degree of automation might well displace larger slower companies with thousands of employees.

"They" don't "want" anything.

"when you only need to do 10% of the job you once did, now you can do much more with your time, focusing on the areas where human intelligence, judgment, and social capital are most valuable", i.e., the difficult, interesting work that brings a little meaning to your day will be automated while you spend even more time in meetings.